When I finished my PhD in 1998 I felt incomplete. I had been doing what I felt was solid research investigating the visual perception involved in interception, with some studies identifying the information sources we use to judge when an approaching object will arrive (the time to contact, TTC) and where it will be when it gets there. I was proud of what I had done. But in all my studies the participants were just pressing buttons! Does this really tell us anything about what people do when they hit a ball, tackle an opponent or drive a car? The experiments I was doing were so passive. I needed to find a way to combine the well-controlled visual stimuli I had developed with a more natural response from the participant.

When I started my post-doc at Nissan Cambridge Basic Research later that year we had lots of fun toys in the lab, including a really nice driving simulator. But the thing that really caught my eye was a small grey box sitting in the back…a Polhemus motion tracker that was being used to monitor the driver’s head movements. I had always wanted to try motion tracking so one week when no one was using the driving simulator I took it out and started playing…

After figuring out how it worked I spent several long hours trying to bring the output from the tracker into the graphics software I was using at the time (Open GL Scene). After much trial and error, I managed to write some code so that the motion tracker could be used to control the paddle in the simple “pong” demo that came with GL Scene (see picture below).

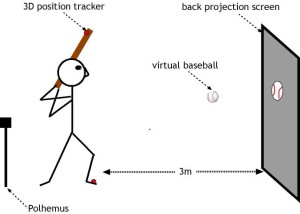

At that moment, my colleague Simon Rushton walked in the room and exclaimed: “Hey, you have made yourself a little VR system!” That was when the bells went off! I could complete the loop! I proceeded to modify the simple pong program. For years, even as the simulator got more and more sophisticated, the file to run it was still called pong.exe! I replaced the little pong ball with a texture-mapped baseball, removed the background, and attached the motion tracking sensor to the end of a bat, and my simulation was born! Here is the diagram I used when I presented my first data from it in 2000 (sadly I didn’t take any photos):

The original simulation had only a ball on a black background. The first batter I brought into the lab found it very difficult to hit this disembodied ball, so I added a progress bar to crudely simulate the pitcher’s windup and we were off and running! In my first set of studies with the simulator, I focused on trying to determine how batters actually used the perceptual information I had been investigating in my PhD. For example, I tested the elegant idea put forth by Michael Turvey that a batter could simplify the timing control in batting by initiating a swing with a fixed movement time (say 0.3 s) when the TTC (based on the rate of expansion of the ball) reached that same value. My data didn’t support this, as batters seemed to always initiate their swings at a constant time after pitch release, not at a constant TTC. My first experiment also included my first brush with the effects the experimenter can have on the data, which I discuss in my research confession here.
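The two timing strategies can be made concrete with a few lines of Python. This is only an illustrative sketch, not the code from the simulator: it assumes a constant ball speed, a 0.074 m ball diameter, a 16.8 m release-to-plate distance, and the 0.3 s movement time from the example above, and all function names are hypothetical.

```python
import math

BALL_DIAMETER_M = 0.074     # assumed baseball diameter
RELEASE_DISTANCE_M = 16.8   # assumed release-to-plate distance (about 55 ft)
MOVEMENT_TIME_S = 0.3       # fixed swing duration, as in the example above
MPH_TO_MS = 0.44704


def optical_ttc(distance_m, speed_ms):
    """Estimate TTC from optical expansion: tau = theta / (d theta / dt)."""
    # Visual angle subtended by the ball at this distance.
    theta = 2 * math.atan(BALL_DIAMETER_M / (2 * distance_m))
    # Rate of change of that angle for a ball approaching at constant speed.
    theta_dot = BALL_DIAMETER_M * speed_ms / (
        distance_m ** 2 + (BALL_DIAMETER_M / 2) ** 2
    )
    return theta / theta_dot


def flight_time(speed_mph):
    """Time from release to the plate at constant speed."""
    return RELEASE_DISTANCE_M / (speed_mph * MPH_TO_MS)


def swing_onset_constant_ttc(speed_mph):
    """Onset (seconds after release) when TTC has dropped to the movement time."""
    return flight_time(speed_mph) - MOVEMENT_TIME_S


def timing_error(onset_s, speed_mph):
    """Bat arrival minus ball arrival; positive means the swing is late."""
    return onset_s + MOVEMENT_TIME_S - flight_time(speed_mph)


if __name__ == "__main__":
    fixed_onset = 0.2  # hypothetical constant delay after release, in seconds
    for mph in (63, 74, 85):
        ttc_error = timing_error(swing_onset_constant_ttc(mph), mph)
        delay_error = timing_error(fixed_onset, mph)
        print(f"{mph} mph: constant-TTC error {ttc_error:+.3f} s, "
              f"constant-delay error {delay_error:+.3f} s")
```

Under these assumptions the constant-TTC rule adapts automatically to pitch speed, whereas a constant delay after release is only well timed for one speed and is early or late for the others.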

These early studies also quickly led me to something that, as a PhD in vision science, I thought I would never touch with a ten-foot pole: cognition. In my very first experiment, college baseball players were tasked with trying to hit a set of pitches that varied completely randomly in speed and direction. For example, the pitch speed could randomly have any value between 63 and 85 mph. Even though in this situation there is reliable information the batter could use to judge the TTC and pitch trajectory (the exact ones I had studied in my PhD), the batters could barely make contact with the ball and became very frustrated! Another thing that kept happening was the batter turning around and asking me what the count (i.e., # of balls and strikes) was.

That was when I realized I needed to make my simulation a bit more true to the real thing. A lot of people have asked me over the years why I didn’t just do that in the first place. For example, why didn’t I just try to create a baseball video game and throw everything in there? While I’ll admit part of it was due to my limited skill as a computer programmer, from the start, I had a deliberate plan of slowly adding in elements to my simulation one by one. For me, this is a mistake that a lot of people using research simulations (particularly driving and flight) make. One of the real advantages of a sim is that you can break apart a skill and study it…if you immediately throw in all the complexities of the real action you have lost that and would be better off actually studying the real thing in field studies.

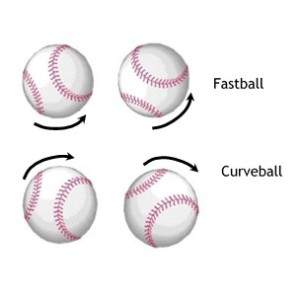

The first adjustment I made to my simulation was to make the virtual pitcher throw a distinct set of pitches (each with a small range in speeds) and add in the appropriate ball rotation cues for different types of baseball pitches, e.g., overspin for a curveball and underspin for a fastball:

These simple changes made a huge difference! The batters could now hit effectively in my simulation and no longer wanted to smack me over the head with their bat! It was also clear that something else was happening too: batters were guessing what the next pitch would be based on the sequence of the previous 2-3 pitches. Again, not the behavior I was expecting in a situation where all the perceptual information needed to hit is there.

The final thing I added in this phase was the pitch count (which was displayed to the batter on a virtual scoreboard at the top of the screen) and this again made a huge difference. If my virtual pitcher threw a fastball when the count was 3 balls and no strikes, the batting average of players in my simulation was over .600! This occurred even though the perceptual information was exactly the same as when the pitcher threw a fastball in an 0-2 count (for which the batting average was around .200). Again, batters were obviously anticipating what the pitch would be before they stepped up to the plate. These effects of pitch count and sequence culminated in a model I developed to explain how these guesses might be generated.
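To give a flavor of how sequence-based guessing can be expressed, here is a minimal first-order Markov sketch in Python. It is not the published model: it ignores the pitch count entirely, the function names are hypothetical, and the pitch sequence is made up purely for illustration.

```python
from collections import Counter, defaultdict


def fit_transitions(pitches):
    """Count how often each pitch type follows each other pitch type."""
    counts = defaultdict(Counter)
    for previous, current in zip(pitches, pitches[1:]):
        counts[previous][current] += 1
    return counts


def guess_next(counts, last_pitch):
    """Return (pitch, probability) for the most likely next pitch, or None."""
    followers = counts.get(last_pitch)
    if not followers:
        return None  # this pitch type has never been followed by anything
    pitch, n = followers.most_common(1)[0]
    return pitch, n / sum(followers.values())


if __name__ == "__main__":
    # Hypothetical sequence of pitches seen so far in a session.
    seen = ["fastball", "curveball", "fastball", "curveball", "fastball", "fastball"]
    table = fit_transitions(seen)
    print(guess_next(table, "fastball"))
```

A batter behaving like this would commit early to the pitch that has most often followed the previous one, which helps when the pitcher is predictable and hurts when the guess is wrong.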

That was it for batting simulation versions 1.0, 1.1 and 1.2. I am still amazed that I had the opportunity to do all this sports research while working as a post-doc for a driving company! It was time to move to a new lab at Arizona State University….

In Batting Simulator 2.0 (shown below) I added a few more features. You could now see the windup of a (very crude) virtual pitcher, and I added a field and a fence.

Another big addition at this stage was visual and auditory feedback. When the batter hit the ball, a crack-of-the-bat sound would be played and the virtual ball would fly back out onto the field. I had now fully closed the loop: perception leading to action leading to feedback to adjust perception-action on the next at-bat. One interesting challenge I had with feedback was how to let the batter know that they had hit a foul ball, and in particular which side of the field the foul ball went to. Because of the screen size I could not display balls flying into the crowd. So I took advantage of how the lab was constructed. In the location where the virtual left field stands would be there was a large (covered) window, so if the batter hit it there I would play the sound of smashing glass. In virtual right field there was a large metal door, so I played a clanging sound if the ball was hit there. Hey, sometimes you have to improvise.

Very soon after, in version 2.1, I added another motion tracking system so I could record the movements of the batter’s body along with the bat. That was when another unexpected thing happened. I had been recording the movements of the bat and body of one highly experienced player and was starting to come up with a reasonably good biomechanical model of the swing based on the timing of the different events (e.g., the front foot leaving the ground, the bat leaving the shoulder, etc.), but I had one set of data from this batter that did not fit with the model at all! After looking at it a bit more closely, I realized that the data was from a session in which I had a couple of the ASU coaches visiting the lab and checking out my sim. I could measure the effects of pressure and a new set of studies was born! As I hope you can see from this story, I have really stumbled upon a lot of research ideas along the way; I didn’t start with a grand plan.

In version 2.2, I added a sleeve to the bat that could be used to make the bat heavier or lighter. This allowed us to study how batters adapt their swing to changes in bat weight.

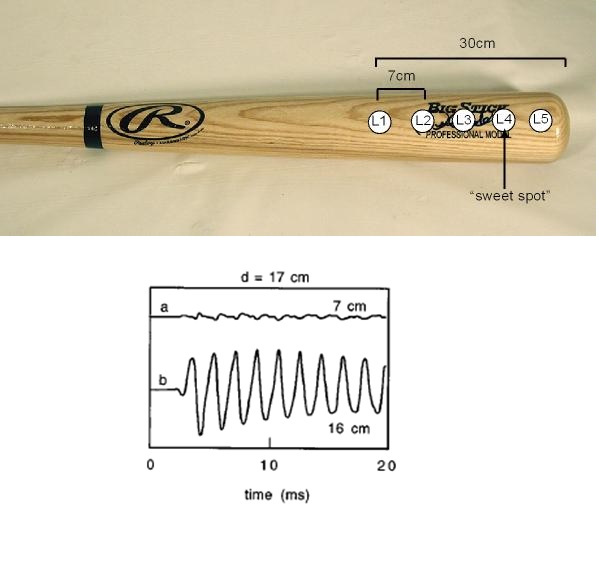

In version 2.3, I worked to address one of the most common complaints I heard from the batters in my studies: “it’s pretty cool but it’s weird that I don’t feel anything when I hit the ball”. To create the tactile feedback, I duct taped a bunch of tactors to create a Franken-bat. The signal sent to the tactors varied in location, frequency and amplitude depending on where the collision was detected. So, for example, you would get virtually no vibration when the ball contacted the sweet spot and a really strong vibration if the ball hit near the handle.

The batters in my studies really liked this addition and I was able to show they actually used the tactile information to adjust the timing of their swing.
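The mapping from contact location to vibration described above could be sketched roughly as follows. Everything numeric here is an assumption for illustration (bat length, sweet-spot position, number of tactors, frequency range, and the linear scaling), and the names are hypothetical; this is not the code that drove the Franken-bat.

```python
from dataclasses import dataclass

BAT_LENGTH_M = 0.84            # assumed bat length
SWEET_SPOT_M = 0.67            # assumed sweet-spot distance from the knob
FREQ_RANGE_HZ = (50.0, 250.0)  # assumed usable tactor frequency range


@dataclass
class TactorCommand:
    tactor_index: int    # which tactor along the bat to drive (0 = nearest the knob)
    frequency_hz: float
    amplitude: float     # normalised drive level, 0.0 to 1.0


def tactor_command(contact_m, n_tactors=4):
    """Map a contact location (metres from the knob) to a tactor command."""
    if not 0.0 <= contact_m <= BAT_LENGTH_M:
        raise ValueError("contact point is off the bat")
    # Miss distance from the sweet spot, scaled by the largest possible miss.
    max_miss = max(SWEET_SPOT_M, BAT_LENGTH_M - SWEET_SPOT_M)
    miss = abs(contact_m - SWEET_SPOT_M) / max_miss
    low, high = FREQ_RANGE_HZ
    # Assumed linear scaling: a bigger miss gives a stronger, higher-frequency buzz.
    frequency = low + (high - low) * miss
    # Drive the tactor closest to where the ball struck the bat.
    index = min(int(contact_m / BAT_LENGTH_M * n_tactors), n_tactors - 1)
    return TactorCommand(index, frequency, miss)


if __name__ == "__main__":
    for contact in (0.10, 0.40, SWEET_SPOT_M):
        print(contact, tactor_command(contact))
```

With these assumed values, contact at the sweet spot produces zero amplitude and contact near the handle produces a strong vibration, which matches the qualitative behavior described above.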

At this point my batting simulator was mothballed for a few years during my time in England. I never quite figured out how to get that cricket simulator working! (Seriously, simulating the ball bounce would require something like a CAVE with a projection on the floor and I still doubt it would look right).

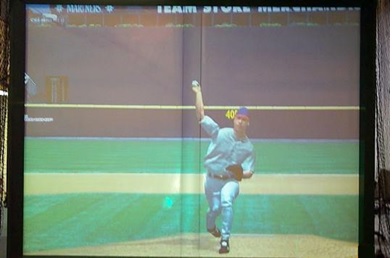

But I am happy to say it will soon be back in action! The big new additions to version 3.0 are wireless motion tracking and a more realistic looking pitcher (modified from an older game):

The most exciting thing about this is that I have taken movement recordings from a real player throwing different pitch types (e.g., fastball, curveball) and incorporated them into the sim which will now have realistic advance cues (e.g., the pitcher’s body language will be related to the type of pitch thrown).

Hope you have enjoyed my simulator story so far. One of the things I never could have anticipated is how much I have been able to use it to study more general issues like attentional control, embodied perception, action observation, response inhibition, imagery and memory bias. I really just started out trying to figure out how people hit the round white thing with the long brown thing 🙂 I hope I have a few more stories to tell about the evolution of my sim…and, heck, I have an Oculus Rift lying around my lab now so version 4.0 might be really something!

Articles:

Behavior of College Baseball Players in a Virtual Batting Task

“Markov at the Bat”: A model of cognitive processing in baseball batters.

Attending to the execution of a complex sensorimotor skill: Expertise differences, choking and slumps

Effects of focus of attention on baseball batting performance in players of different skill level

“As soon as the bat met the ball, I knew it was gone”: Outcome prediction, hindsight bias, and the representation and control of action in novice and expert baseball players.

How do batters use visual, auditory, and tactile information about the success of a baseball swing?

A model of motor inhibition for a complex skill: baseball batting.

Switching tools: Perceptual-motor recalibration to weight changes.

Expert baseball batters have greater sensitivity in making swing decisions

Hitting is contagious: Experience and action induction

A direct comparison of the effects of imagery and action observation on hitting performance

Interactions between performance pressure, performance streaks and attentional focus

Being selective at the plate: Processing dependence between perceptual variables relates to hitting goals and performance

The Moneyball Problem: What is the Best Way to Present Situational Statistics to an Athlete?